Abstract: Diffusion probabilistic models (DPMs) and their extensions have emerged as competitive generative models, yet they face challenges in efficient sampling. We propose a new bilateral denoising diffusion model (BDDM) that parameterizes both the forward and reverse processes with a scheduling network and a score network, which can be trained with a novel bilateral modeling objective. We show that the new surrogate objective achieves a tighter lower bound on the log marginal likelihood than a conventional surrogate. We also find that BDDM allows inheriting pre-trained score network parameters from any DPM, which enables fast and stable learning of the scheduling network and optimization of a noise schedule for sampling. Our experiments demonstrate that BDDMs can generate high-fidelity audio samples with as few as 3 sampling steps. Moreover, compared to other state-of-the-art diffusion-based neural vocoders, BDDMs produce samples of comparable or higher quality that are indistinguishable from human speech, notably with only 7 sampling steps (143x faster than WaveGrad and 28.6x faster than DiffWave).
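The reported speedups can be sanity-checked with simple arithmetic. The sketch below assumes (this page does not state it) that the speedup is measured as the ratio of sampling steps, and that the WaveGrad and DiffWave baselines use 1000 and 200 steps respectively; the constant and function names are hypothetical.

```python
# Hypothetical sanity check. ASSUMPTION: speedup = ratio of sampling steps,
# with WaveGrad at 1000 steps and DiffWave at 200 steps (not stated on this page).
BDDM_STEPS = 7
BASELINE_STEPS = {"WaveGrad": 1000, "DiffWave": 200}


def step_ratio(baseline_steps: int, bddm_steps: int = BDDM_STEPS) -> float:
    """Return how many times fewer sampling steps BDDM uses than a baseline."""
    return baseline_steps / bddm_steps


for name, steps in BASELINE_STEPS.items():
    print(f"{name}: {step_ratio(steps):.1f}x")
```

Under these assumptions the ratios are about 142.9x and 28.6x, consistent with the 143x and 28.6x quoted above. Measured wall-clock speedups could differ if per-step network cost varies between models.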

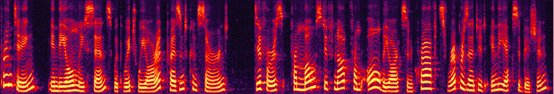

Fast and high-fidelity speech generation using BDDMs:

By introducing a scheduling network optimized with our derived loss, we can generate high-fidelity speech with as few as 3 steps.
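To show how few-step generation proceeds once a short noise schedule is available, here is a minimal sketch of standard DDPM-style ancestral sampling over an arbitrary short schedule. This is not the BDDM implementation. The noise predictor `eps_model` is a hypothetical stand-in for a trained score network, mel-spectrogram conditioning is omitted, and the 3-step `betas` values in the usage example are made up. BDDM instead learns its schedule with the scheduling network.

```python
import numpy as np


def reverse_sample(eps_model, betas, shape, rng):
    """DDPM-style ancestral sampling over a (possibly very short) noise schedule.

    eps_model(x, alpha_bar) is a HYPOTHETICAL noise predictor; a real vocoder
    would also condition on a mel spectrogram, which is omitted here.
    """
    betas = np.asarray(betas, dtype=np.float64)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # step 0: start from white noise
    for n in reversed(range(len(betas))):
        eps = eps_model(x, alpha_bars[n])
        # Posterior mean of x_{n-1} given x_n and the predicted noise
        x = (x - betas[n] / np.sqrt(1.0 - alpha_bars[n]) * eps) / np.sqrt(alphas[n])
        if n > 0:
            # Add noise with the posterior standard deviation (sqrt of beta tilde)
            sigma = np.sqrt((1.0 - alpha_bars[n - 1]) / (1.0 - alpha_bars[n]) * betas[n])
            x = x + sigma * rng.standard_normal(shape)
    return x


rng = np.random.default_rng(0)
dummy_eps = lambda x, alpha_bar: np.zeros_like(x)  # placeholder, not a trained network
audio = reverse_sample(dummy_eps, betas=[1e-4, 0.05, 0.5], shape=(16000,), rng=rng)
print(audio.shape)  # (16000,)
```

Each loop iteration corresponds to one of the numbered steps in the table below. With a trained noise predictor and a well-chosen schedule, three iterations can already move white noise toward speech.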

Text: Printing, in the only sense with which we are at present concerned, differs from most if not from all the arts and crafts represented in the Exhibition.

| Denoising step | Sample |
| --- | --- |
| Step 0 (White Noise) | Note: Consider lowering the volume before listening. |
| Step 1 | |
| Step 2 | |
| Step 3 | |

LJ Speech samples generated by different neural vocoders:

Note: Different rows correspond to different noise schedules or sampling methods for inference.

| Text | Under the new rule visitors were not allowed to pass into the interior of the prison, but were detained between the grating. | This Commission can recommend no procedures for the future protection of our Presidents which will guarantee security. |
| --- | --- | --- |
| Ground Truth | | |
| WaveNet (MoL) | | |
| WaveGlow | | |
| MelGAN | | |
| HiFi-GAN V1 | | |
| WaveGrad | | |
| DiffWave | | |
| BDDM - 3 steps | | |
| BDDM - 7 steps | | |
| BDDM - 12 steps | | |

VCTK samples from different generative diffusion models:

Note: Different rows correspond to different noise schedules or sampling methods for inference.

| Text | Frankly, we should all have such problems. | I felt he was excellent. |
| --- | --- | --- |
| Ground Truth | | |
| DDPM - 8 steps (Grid Search) | | |
| DDPM - 1000 steps (Linear) | | |
| DDIM - 8 steps (Linear) | | |
| DDIM - 100 steps (Linear) | | |
| NE - 8 steps (Linear) | | |
| BDDM - 8 steps | | |